- Algorithm Time Complexity Cheat Sheet

- Time Complexity Cheat Sheet Python

- Sorting Time Complexity Cheat Sheet

- Big O Time Complexity Chart

- Time Complexity Calculator Online

Know Thy Complexities! This page covers the space and time Big-O complexities of common algorithms used in computer science. When preparing for technical interviews in the past, I found myself spending hours crawling the internet putting together the best, average, and worst case complexities for search and sorting algorithms so that I wouldn't be stumped when asked about them.

Common Data Structure Operations

| Data Structure | Access (average) | Search (average) | Insertion (average) | Deletion (average) | Access (worst) | Search (worst) | Insertion (worst) | Deletion (worst) | Space (worst) |
|---|---|---|---|---|---|---|---|---|---|
| Array | Θ(1) | Θ(n) | Θ(n) | Θ(n) | O(1) | O(n) | O(n) | O(n) | O(n) |
| Stack | Θ(n) | Θ(n) | Θ(1) | Θ(1) | O(n) | O(n) | O(1) | O(1) | O(n) |
| Queue | Θ(n) | Θ(n) | Θ(1) | Θ(1) | O(n) | O(n) | O(1) | O(1) | O(n) |
| Singly-Linked List | Θ(n) | Θ(n) | Θ(1) | Θ(1) | O(n) | O(n) | O(1) | O(1) | O(n) |
| Doubly-Linked List | Θ(n) | Θ(n) | Θ(1) | Θ(1) | O(n) | O(n) | O(1) | O(1) | O(n) |
| Skip List | Θ(log(n)) | Θ(log(n)) | Θ(log(n)) | Θ(log(n)) | O(n) | O(n) | O(n) | O(n) | O(n log(n)) |
| Hash Table | N/A | Θ(1) | Θ(1) | Θ(1) | N/A | O(n) | O(n) | O(n) | O(n) |
| Binary Search Tree | Θ(log(n)) | Θ(log(n)) | Θ(log(n)) | Θ(log(n)) | O(n) | O(n) | O(n) | O(n) | O(n) |
| Cartesian Tree | N/A | Θ(log(n)) | Θ(log(n)) | Θ(log(n)) | N/A | O(n) | O(n) | O(n) | O(n) |
| B-Tree | Θ(log(n)) | Θ(log(n)) | Θ(log(n)) | Θ(log(n)) | O(log(n)) | O(log(n)) | O(log(n)) | O(log(n)) | O(n) |
| Red-Black Tree | Θ(log(n)) | Θ(log(n)) | Θ(log(n)) | Θ(log(n)) | O(log(n)) | O(log(n)) | O(log(n)) | O(log(n)) | O(n) |
| Splay Tree | N/A | Θ(log(n)) | Θ(log(n)) | Θ(log(n)) | N/A | O(log(n)) | O(log(n)) | O(log(n)) | O(n) |
| AVL Tree | Θ(log(n)) | Θ(log(n)) | Θ(log(n)) | Θ(log(n)) | O(log(n)) | O(log(n)) | O(log(n)) | O(log(n)) | O(n) |
| KD Tree | Θ(log(n)) | Θ(log(n)) | Θ(log(n)) | Θ(log(n)) | O(n) | O(n) | O(n) | O(n) | O(n) |
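To make the gap between the Array and Hash Table search rows concrete, here is a minimal Python sketch. It assumes Python's built-in `list` (a dynamic array) and `set` (a hash table) as stand-ins for those two rows; the helper `linear_search_steps` is a hypothetical name introduced only for this illustration.

```python
def linear_search_steps(items, target):
    """Scan a list left to right; return (found, number of comparisons)."""
    comparisons = 0
    for value in items:
        comparisons += 1
        if value == target:
            return True, comparisons
    return False, comparisons


n = 1000
data = list(range(n))

# Worst case for the array: the target is the last element, so all n
# elements are compared before it is found.
found, steps = linear_search_steps(data, n - 1)

# A hash table finds the same key in O(1) time on average, without a scan.
lookup = set(data)
in_set = (n - 1) in lookup

print(found, steps, in_set)
```

The comparison count grows linearly with `n` for the list scan, while the set lookup cost stays roughly constant on average. Its O(n) worst case only appears when many keys collide.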

Array Sorting Algorithms

| Algorithm | Time (best) | Time (average) | Time (worst) | Space (worst) |
|---|---|---|---|---|
| Quicksort | Ω(n log(n)) | Θ(n log(n)) | O(n^2) | O(log(n)) |
| Mergesort | Ω(n log(n)) | Θ(n log(n)) | O(n log(n)) | O(n) |
| Timsort | Ω(n) | Θ(n log(n)) | O(n log(n)) | O(n) |
| Heapsort | Ω(n log(n)) | Θ(n log(n)) | O(n log(n)) | O(1) |
| Bubble Sort | Ω(n) | Θ(n^2) | O(n^2) | O(1) |
| Insertion Sort | Ω(n) | Θ(n^2) | O(n^2) | O(1) |
| Selection Sort | Ω(n^2) | Θ(n^2) | O(n^2) | O(1) |
| Tree Sort | Ω(n log(n)) | Θ(n log(n)) | O(n^2) | O(n) |
| Shell Sort | Ω(n log(n)) | Θ(n(log(n))^2) | O(n(log(n))^2) | O(1) |
| Bucket Sort | Ω(n+k) | Θ(n+k) | O(n^2) | O(n) |
| Radix Sort | Ω(nk) | Θ(nk) | O(nk) | O(n+k) |
| Counting Sort | Ω(n+k) | Θ(n+k) | O(n+k) | O(k) |
| Cubesort | Ω(n) | Θ(n log(n)) | O(n log(n)) | O(n) |
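Quicksort's quadratic worst case is easy to trigger. The sketch below is a simplified, non-in-place quicksort that always picks the first element as the pivot (one possible pivot rule, chosen here for illustration) and counts one pivot comparison per element at each level. Because it builds new lists, it does not achieve the O(log(n)) space of an in-place version.

```python
import random


def quicksort(values):
    """Return (sorted_list, comparisons) using the first element as pivot."""
    if len(values) <= 1:
        return list(values), 0
    pivot, rest = values[0], values[1:]
    smaller = [v for v in rest if v < pivot]
    larger = [v for v in rest if v >= pivot]
    left, left_cost = quicksort(smaller)
    right, right_cost = quicksort(larger)
    # Each remaining element is compared against the pivot once per level.
    return left + [pivot] + right, len(rest) + left_cost + right_cost


n = 200
already_sorted = list(range(n))
shuffled = already_sorted[:]
random.Random(0).shuffle(shuffled)

_, worst_cost = quicksort(already_sorted)   # every split is maximally unbalanced
sorted_result, typical_cost = quicksort(shuffled)

print(worst_cost, typical_cost)
```

On already sorted input every partition puts all remaining elements on one side, so the count is n(n-1)/2, which is 19,900 for n = 200. A shuffled input needs far fewer comparisons, in line with the Θ(n log(n)) average.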

When measuring the efficiency of an algorithm, we usually consider both its time complexity and its space complexity. This article looks at those two measures for common sorting algorithms and data structures, and at how quickly the cost of each operation grows with the input size.

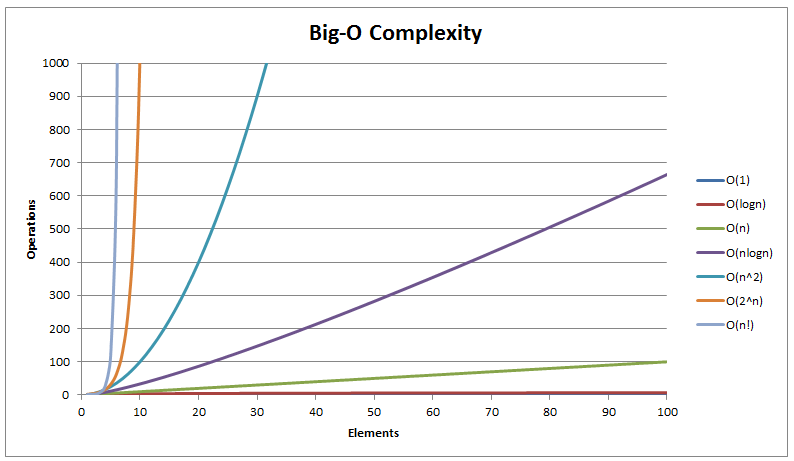

Big-O Complexity Chart

First, consider the growth rates of some familiar complexity classes. The chart makes the gap between an O(1) algorithm and an O(n^2) algorithm visible: as the input grows, the cost of some operations stays constant, some grow along a straight line, and the rest grow quadratically, exponentially, or factorially.
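One rough way to feel these growth rates without a chart is to ask how much the operation count increases when the input size doubles. The sketch below is illustrative only; the `GROWTH` table and the `doubling_ratio` helper are names made up for this example.

```python
import math

# Operation counts for a few common complexity classes.
GROWTH = {
    "O(1)": lambda n: 1,
    "O(log n)": lambda n: math.log2(n),
    "O(n)": lambda n: n,
    "O(n log n)": lambda n: n * math.log2(n),
    "O(n^2)": lambda n: n ** 2,
    "O(2^n)": lambda n: 2 ** n,
}


def doubling_ratio(name, n):
    """How many times more operations are needed for input 2n than for n."""
    f = GROWTH[name]
    return f(2 * n) / f(n)


for name in GROWTH:
    print(f"{name:>10}: x{doubling_ratio(name, 16):.2f}")
```

Going from n = 16 to n = 32, constant work does not change, linear work doubles, quadratic work quadruples, and exponential work is multiplied by 65,536.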

Sorting Algorithms


To compare algorithms fairly, we compare the resources they use: how much time an algorithm needs to complete, how much memory it uses, and how many operations it must perform to solve the problem:

- Time efficiency: a measure of the amount of time an algorithm takes to solve a problem.

- Space efficiency: a measure of the amount of memory an algorithm needs to solve a problem.

- Complexity theory: a study of algorithm performance based on cost functions of statement counts.
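The three measures above often trade off against each other. As a minimal sketch, here are two ways to detect a duplicate in a list, each instrumented with a simple counter standing in for the "statement count" cost function. The function names are hypothetical, chosen for this example.

```python
def has_duplicate_pairs(values):
    """O(n^2) time, O(1) extra space: compare every pair of elements."""
    checks = 0
    for i in range(len(values)):
        for j in range(i + 1, len(values)):
            checks += 1
            if values[i] == values[j]:
                return True, checks
    return False, checks


def has_duplicate_set(values):
    """O(n) average time, O(n) extra space: remember every value seen so far."""
    seen = set()
    checks = 0
    for value in values:
        checks += 1
        if value in seen:
            return True, checks
        seen.add(value)
    return False, checks


unique_values = list(range(100))
print(has_duplicate_pairs(unique_values))  # (False, 4950)
print(has_duplicate_set(unique_values))    # (False, 100)
```

The second version does far fewer checks on the same input, but only by spending memory proportional to the input size.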

| Sorting Algorithm | Space (worst case) | Time (best case) | Time (average case) | Time (worst case) |
|---|---|---|---|---|
| Bubble Sort | O(1) | O(n) | O(n^2) | O(n^2) |
| Heapsort | O(1) | O(n log n) | O(n log n) | O(n log n) |
| Insertion Sort | O(1) | O(n) | O(n^2) | O(n^2) |
| Mergesort | O(n) | O(n log n) | O(n log n) | O(n log n) |
| Quicksort | O(log n) | O(n log n) | O(n log n) | O(n^2) |
| Selection Sort | O(1) | O(n^2) | O(n^2) | O(n^2) |
| Shell Sort | O(1) | O(n) | O(n (log n)^2) | O(n (log n)^2) |
| Smooth Sort | O(1) | O(n) | O(n log n) | O(n log n) |
| Tree Sort | O(n) | O(n log n) | O(n log n) | O(n^2) |
| Counting Sort | O(k) | O(n + k) | O(n + k) | O(n + k) |
| Cubesort | O(n) | O(n) | O(n log n) | O(n log n) |
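The difference between the best and worst case of insertion sort can be observed directly by counting comparisons. The following is a small sketch of a textbook insertion sort, instrumented with a counter that is not part of the algorithm itself.

```python
def insertion_sort(values):
    """Sort a copy of values; return (sorted_list, comparisons)."""
    result = list(values)
    comparisons = 0
    for i in range(1, len(result)):
        current = result[i]
        j = i - 1
        # Shift larger elements one slot right until current fits.
        while j >= 0:
            comparisons += 1
            if result[j] <= current:
                break
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = current
    return result, comparisons


n = 100
_, best = insertion_sort(list(range(n)))          # already sorted
_, worst = insertion_sort(list(range(n, 0, -1)))  # reverse sorted

print(best, worst)  # 99 4950
```

Sorted input needs one comparison per element (n - 1 in total), while reversed input needs n(n - 1)/2, matching the O(n) best case and O(n^2) worst case.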

Data Structure Operations

This chart covers some popular data structures, such as arrays, binary trees, and linked lists, and three operations on each: search, insert, and delete.

| Data Structure | Search (average) | Insert (average) | Delete (average) | Search (worst) | Insert (worst) | Delete (worst) |
|---|---|---|---|---|---|---|
| Array | O(n) | N/A | N/A | O(n) | N/A | N/A |
| AVL Tree | O(log n) | O(log n) | O(log n) | O(log n) | O(log n) | O(log n) |
| B-Tree | O(log n) | O(log n) | O(log n) | O(log n) | O(log n) | O(log n) |
| Binary Search Tree | O(log n) | O(log n) | O(log n) | O(n) | O(n) | O(n) |
| Doubly Linked List | O(n) | O(1) | O(1) | O(n) | O(1) | O(1) |
| Hash Table | O(1) | O(1) | O(1) | O(n) | O(n) | O(n) |
| Linked List | O(n) | O(1) | O(1) | O(n) | O(1) | O(1) |
| Red-Black Tree | O(log n) | O(log n) | O(log n) | O(log n) | O(log n) | O(log n) |
| Sorted Array | O(log n) | O(n) | O(n) | O(log n) | O(n) | O(n) |
| Stack | O(n) | O(1) | O(1) | O(n) | O(1) | O(1) |
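The Sorted Array row can be tried out with Python's standard `bisect` module. This sketch assumes a plain Python `list` kept in sorted order; `sorted_contains` is a helper name introduced for the example.

```python
import bisect


def sorted_contains(sorted_values, target):
    """Binary search: O(log n) comparisons on a sorted list."""
    i = bisect.bisect_left(sorted_values, target)
    return i < len(sorted_values) and sorted_values[i] == target


values = [2, 3, 5, 7, 11]

# insort finds the slot in O(log n), but shifting the later elements to
# make room is O(n), which is why insertion is listed as O(n).
bisect.insort(values, 6)

print(values)                       # [2, 3, 5, 6, 7, 11]
print(sorted_contains(values, 7))   # True
print(sorted_contains(values, 4))   # False
```

Searching is fast because the order lets each comparison discard half of the remaining range. Inserts and deletes pay for that order by moving elements.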


Growth of Functions

The order of growth of the running time of an algorithm gives a simple characterization of the algorithm’s efficiency and also allows us to compare the relative performance of alternative algorithms.


The table below lists, for each input size n, the running time of an algorithm that performs f(n) operations. The figures are consistent with each operation taking one nanosecond.

| Input size n | f(n) = log n | f(n) = n | f(n) = n log n | f(n) = n^2 | f(n) = 2^n | f(n) = n! |
|---|---|---|---|---|---|---|
| 10 | 0.003 μs | 0.01 μs | 0.033 μs | 0.1 μs | 1 μs | 3.63 ms |
| 20 | 0.004 μs | 0.02 μs | 0.086 μs | 0.4 μs | 1 ms | 77 years |
| 30 | 0.005 μs | 0.03 μs | 0.147 μs | 0.9 μs | 1 sec | 8.4×10^15 years |
| 40 | 0.005 μs | 0.04 μs | 0.213 μs | 1.6 μs | 18.3 min | — |
| 50 | 0.006 μs | 0.05 μs | 0.282 μs | 2.5 μs | 13 days | — |
| 100 | 0.007 μs | 0.1 μs | 0.644 μs | 10 μs | 4×10^13 years | — |
| 1,000 | 0.010 μs | 1.00 μs | 9.966 μs | 1 ms | — | — |
| 10,000 | 0.013 μs | 10 μs | 130 μs | 100 ms | — | — |
| 100,000 | 0.017 μs | 0.10 ms | 1.67 ms | 10 sec | — | — |
| 1,000,000 | 0.020 μs | 1 ms | 19.93 ms | 16.7 min | — | — |
| 10,000,000 | 0.023 μs | 0.01 sec | 0.23 sec | 1.16 days | — | — |
| 100,000,000 | 0.027 μs | 0.10 sec | 2.66 sec | 115.7 days | — | — |
| 1,000,000,000 | 0.030 μs | 1 sec | 29.90 sec | 31.7 years | — | — |
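Running times of this kind can be recomputed with a few lines of Python. The sketch below assumes one nanosecond per operation (the constant `NS_PER_OPERATION` is an assumption you can change) and a 365-day year. It simply converts an operation count into seconds.

```python
import math

NS_PER_OPERATION = 1  # assumption: every basic operation takes one nanosecond
SECONDS_PER_YEAR = 365 * 24 * 3600


def running_time_seconds(operations):
    """Convert an operation count into seconds of running time."""
    return operations * NS_PER_OPERATION * 1e-9


quadratic_1000 = running_time_seconds(1000 ** 2)           # n^2 for n = 1,000
exponential_40 = running_time_seconds(2 ** 40)             # 2^n for n = 40
factorial_20 = running_time_seconds(math.factorial(20))    # n! for n = 20

print(f"n^2, n=1000: {quadratic_1000 * 1e3:.0f} ms")
print(f"2^n, n=40:   {exponential_40 / 60:.1f} min")
print(f"n!,  n=20:   {factorial_20 / SECONDS_PER_YEAR:.0f} years")
```

With these assumptions the three examples come out at about 1 ms, 18.3 minutes, and 77 years respectively.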